Google I/O 2025 melded light-touch UI interactions with an enhanced AI-driven core

We take stock of Google’s new AI offerings. Under a new Material 3 Expressive aesthetic that softens and smooths, AI arrives to take stock of you, your choices, desires, innermost thoughts and exactly what it is you want for dinner

For the past week, all eyes have been on Google as the company wraps up its annual I/O conference with a wave of new announcements. You can find reams of online speculation and bulleted lists elsewhere, but what, ultimately, does all this change – both incremental and monumental – mean for the end user, us?

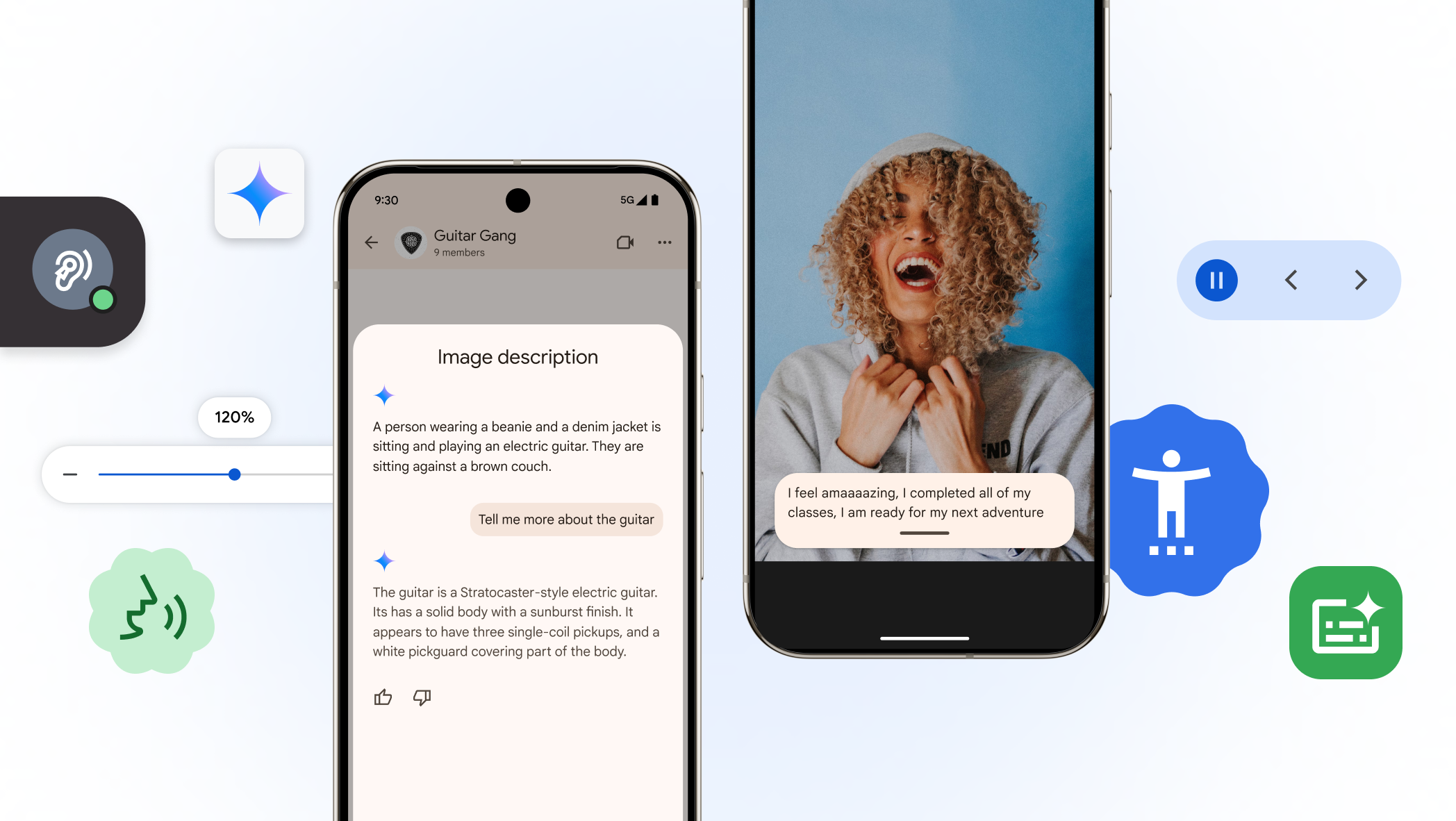

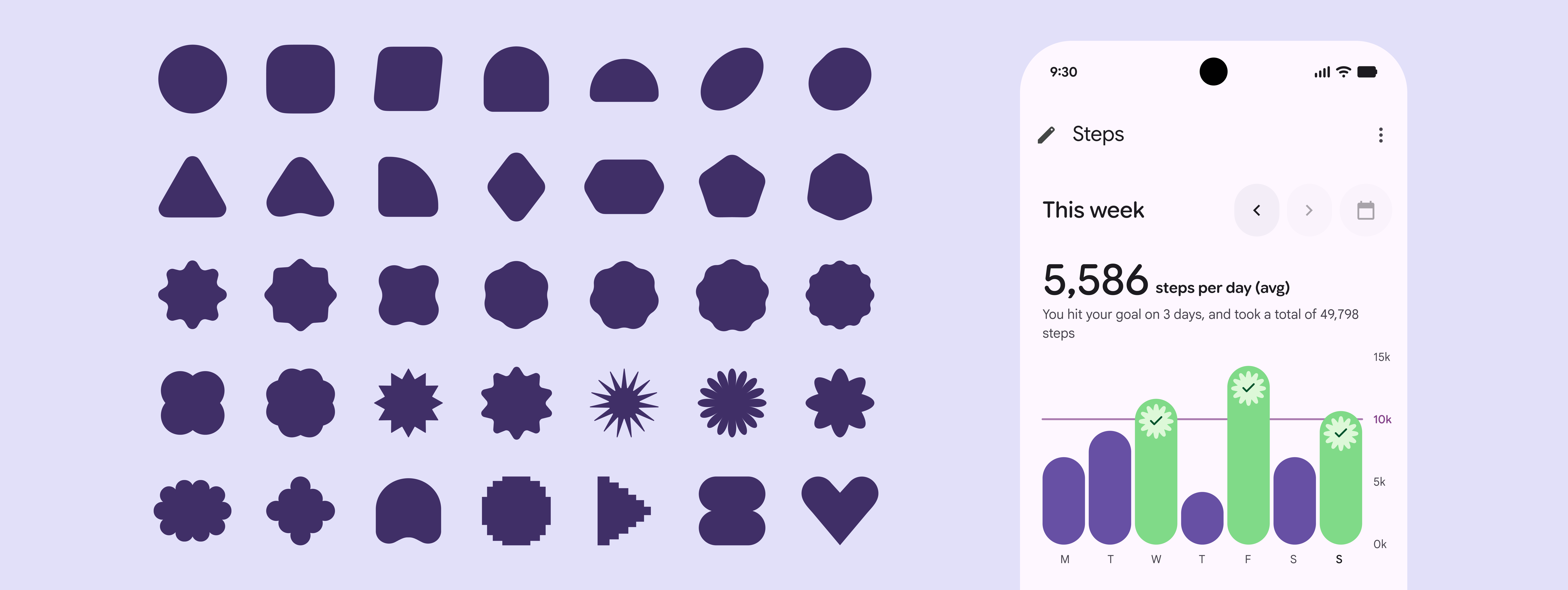

Examples of Google's new Material 3 Expressive UI changes

In amongst a cavalcade of new features, tweaks and updates, the big takeaway was that it’s no longer all about the OS. Granted, Google announced the substantial overhaul of Android and Wear OS with the introduction of a new evolved design approach, Material 3 Expressive. This will underpin what is now the 16th incarnation of Google’s home-brewed phone operating system, Android 16. But what matters more to Google – and by extension you – is the creep of AI functionality into all facets of search and, in turn, consumption.

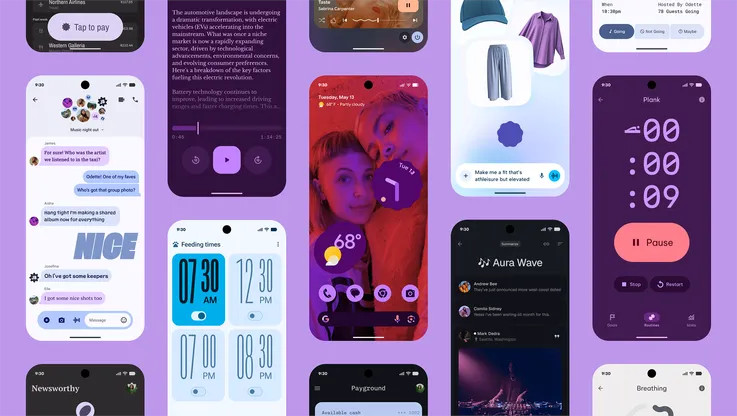

Flow is Google's new generative AI video tool

Android is by far the world’s most dominant mobile OS, with over 70 per cent of market share. Apple’s iOS hoovers up around 28 per cent, leaving a couple of points for independents to scrap over. In the US, things are a little different, and iOS dominates (c57 per cent to 42 per cent). When OS design changes, every pixel shift and icon change, launch animation and enhanced feature is minutely scrutinised in order to determine who will retain the edge for the next cycle of tech.

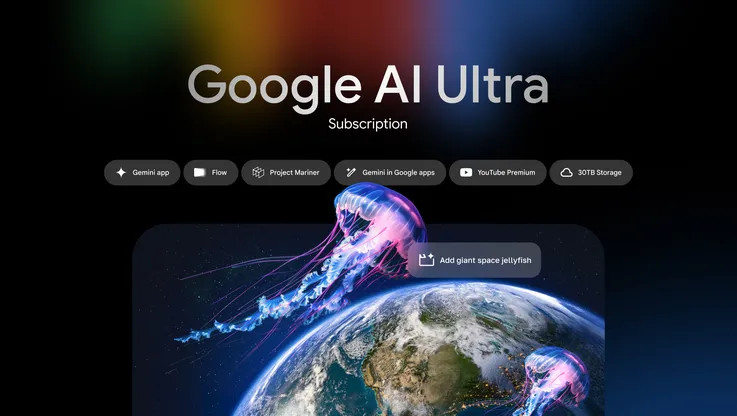

Google AI Ultra is the new premium, catch-all subscription to the company's AI services

The list of Google’s new announcements was especially long this year. Google’s Gemini AI became a little more ubiquitous and a little more pricey, as the top tier version of the system, Google AI Ultra, bundles the cutting edge of the technology into a $250 monthly package that includes generative video (Flow, capable of sating the desire for Guy Ritchie/Grand Theft Auto mash-ups running at a seamless 40 clichés per second), the Whisk visualiser, a new research assistant, Project Mariner, and a subscription to YouTube Premium thrown in. Presumably so you can use the service without having to sit through a slew of AI-generated ads.

Google's Project Mariner is a 'research prototype exploring the future of human-agent interaction'

For AI watchers, the most significant announcement of the week was the arrival of the fully fledged AI Mode, first in the US but rolling out to other markets in due course. AI Mode effectively splices AI-generated responses into search, allowing users to make conversational enquiries either via text or speech and then collating contextually relevant results that can be queried and refined.

Google wants to be at the very heart of your life

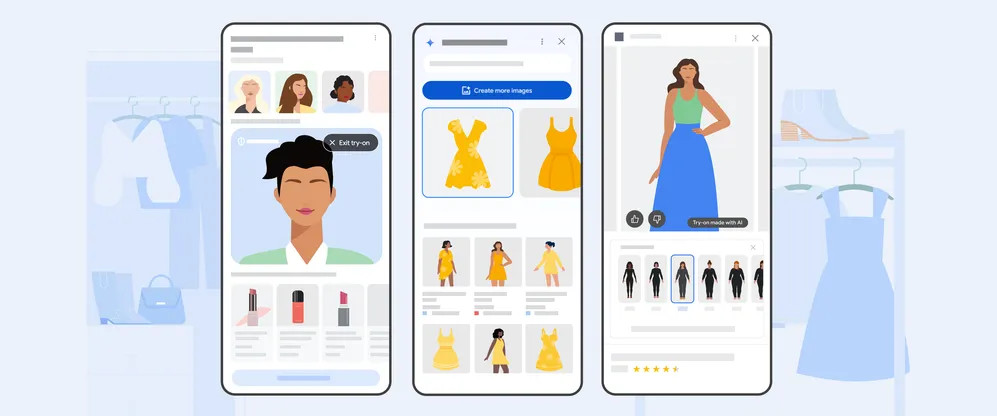

Shopping and searching with AI

In practice, this is how many people already use search engines – asking a question – but AI Mode is about creating a set of queries and responses that flows naturally. Think something along the lines of ‘can you remind me how much the set lunch was at that restaurant I found yesterday’, or some such.

Caveats? It’s too early to tell, but given the slow degradation of ‘traditional’ search via the incursion of first sponsored results and latterly AI-generated summaries, it’s not hard to see how AI Mode could quickly become the archetypal ‘mid’ experience, guiding us all on invisible rails to businesses and services that just happen to be inextricably commercially co-dependent on the big G.

AI Mode's virtual try on service

Naturally, AI Mode will also become the go-to portal for Google’s shopping experience. Google claims to have no fewer than 50 billion product listings on its systems, refreshing two billion of them every hour for accurate price and availability data. In an attempt to make this avalanche of choice feel less overwhelming, AI Mode debuts a couple of new features, including the ability to ‘virtually try on clothes’ using an AI that mimics the drape and fall of clothing across a wide variety of body types. Or you can go one stage further (for US customers only right now) and upload a full-length photograph of yourself to use as the basis for the virtual clothes horse system.

AI Mode promises an 'immersive' shopping experience

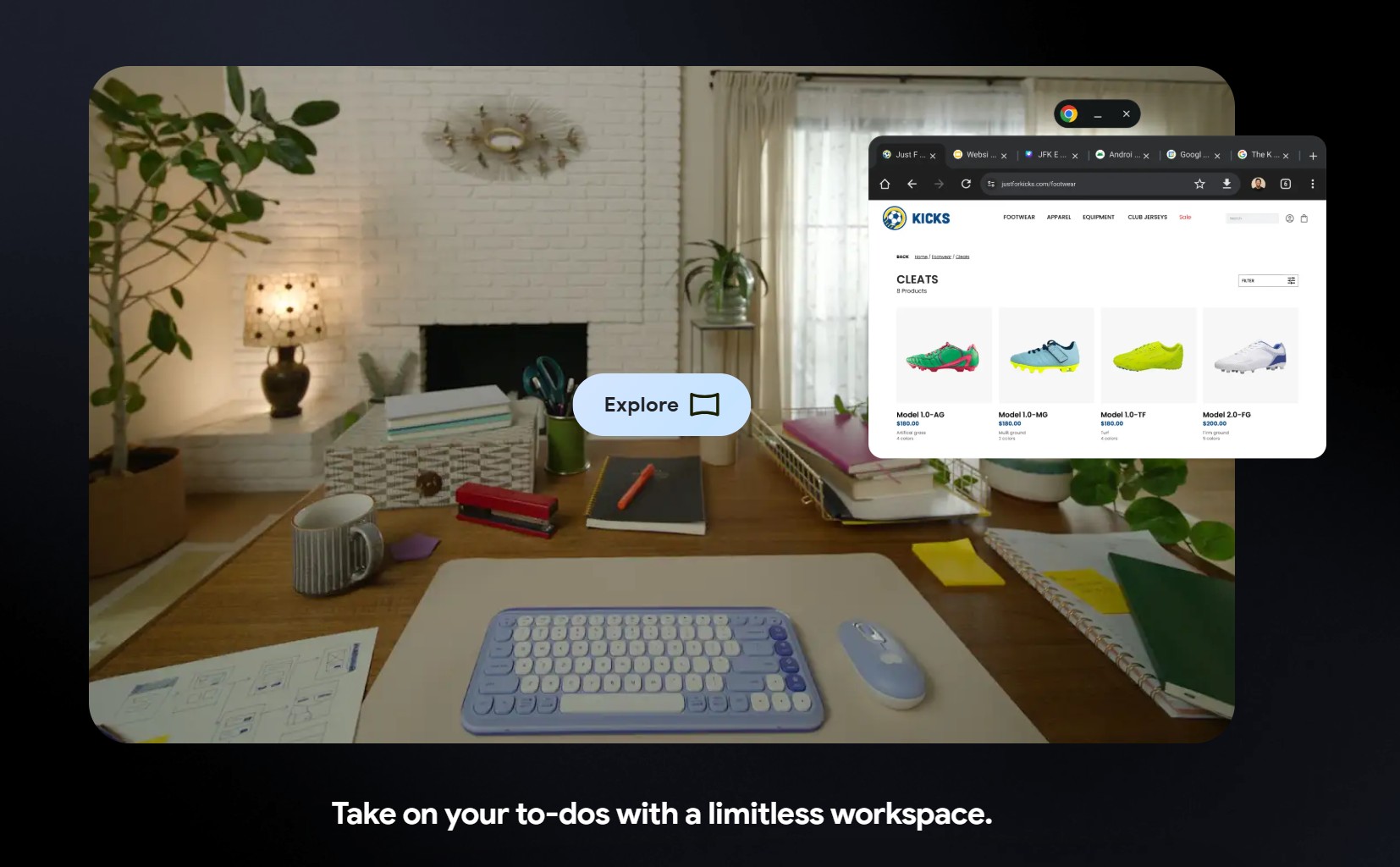

In other news, there was a renewed play for the AR/VR space with the arrival of Android XR, an ‘AI-powered operating system’ designed for augmented reality glasses. Capable of overlaying information, from navigation to how-to guides, to text messages and search, onto the world around you, Android XR will appeal to a certain kind of early adopter, for sure, but is more likely to find its niche in industrial and commercial applications.

Android XR, an augmented layer of information accessed via AR glasses

A new look and feel

Google is heralding Material 3 Expressive as one of its ‘biggest updates in years’. For Wear OS 6 devices, that means making more of the circular interface, with scaling animations that appear to emphasise and exaggerate the ‘water droplet’ design of the display. Animations add a further sense of depth.

Wear OS updates include more dynamic animations

On Android devices, the Material 3 Expressive toolkit takes a similarly fluid and organic approach to animations and haptic feedback. Developers now have access to a revised palette and typography as well as having more freedom when creating shaped buttons and interfaces. The many flavours of Android give the OS an untethered, slightly anarchic feel that is a world apart from Apple’s rigorous rules and high aesthetic standards. Google clearly hopes that a more expansive set of highly curated options will help close the gap.

Wear OS notifications, showing the relationship between text box and curved screen

Android 16 should start rolling out in the next few weeks, initially to Google’s own flagship devices. It also means that when the next tranche of Pixel devices drops – the tenth generation – they’ll come with Android 16 straight out of the box. There’s a long list of enhancements and new features, beyond the new aesthetic, including a Live Update tracker for deliveries, cabs, etc, new camera functionality, more Gemini integration, as well as many behind-the-scenes updates to security and information sharing. Better and more intuitive desktop mirroring is also coming, allowing you to use your Android phone as a portable minicomputer when paired with a suitable screen and keyboard.

Material 3 Expressive comes with a new range of palettes

Google describes all these enhancements as a way of making watch and phone more ‘fluid, personal and glanceable,’ but this feels like sleight of hand, because the whole business model of a modern device is not about glancing but about glueing. With every launch, conference, update and overhaul, the tech giants are simply putting more and more power into these devices of mass distraction, while simultaneously promising hacks and workarounds that purport to make our lives easier and more seamless and less easily diverted by the fast-flowing streams of information. I/O is an especially apt name for an event of this nature, for the ins and outs of digital culture have never felt more imbalanced and precarious.

Material 3 Expressive is designed to offer far greater customisation for users and developers

The internet shifts up a gear

Unfortunately, all the things that make the web bad are still bad even if the interface is shiny, beautiful and new – UI changes can’t cloak the essential emptiness of the experience, just as a glossy AI-generated image captures the attention for a few seconds until its dark, lifeless core is revealed. In that respect, Android 16’s aesthetic improvements are merely a band-aid over a realm of chaos, patching security, guiding choices, and maintaining a grip on the essential tools needed to navigate the modern world, all the while encouraging ever greater user involvement and data commitment.

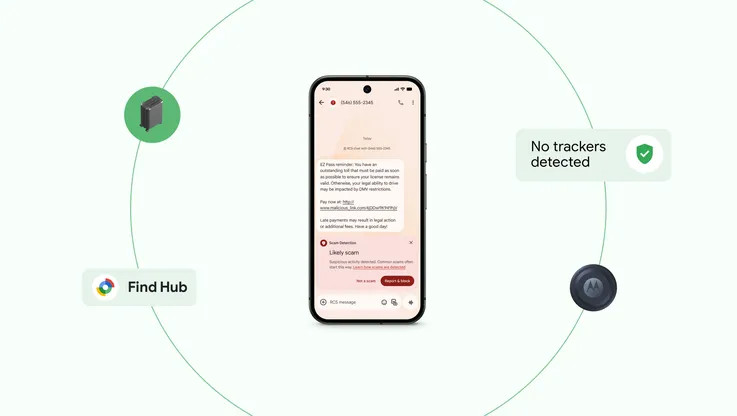

Safety and security were big themes at I/O 2025

On top of all of this, AI is simultaneously invisible and everywhere. AI Mode is just one more step on the road to its inevitable and unstoppable integration with search. If Wi-Fi is considered an essential service, like water or electricity, then isn’t the ability to search for information an extension of that service? Commercialising search is like plumbing in an apartment and then serving up soda water or an influencer’s energy drink through the taps, depending on who has paid for the placement. It remains to be seen whether greater AI integration will be able to undo this or simply accelerate it.

Google Whisk is an AI image generator

To the naysayers, AI has already been derailed by commercial concerns, trumping safety and ethics and copyright and all manner of the apparently outmoded but once essential ways we used to conduct ourselves. There are still pockets of opposition holding out. The idea that generative AI is already beyond parody still holds sway for now. Thus far, the vast majority of AI art, music, writing and films has achieved the status of nothing more than a sophisticated, rather more egregious type of photocopying, plagiarism in its purest form.

A graphic depiction of an Android XR workspace

However, as a new generation of creatives grows up with a technology that’s maturing at a terrifying rate, it’s unlikely this will hold true for much longer. There are already plenty of prompt jockeys out there capable of cajoling the machine into creating something interesting. A generation that can find therapeutic solace in the ‘thoughts’ of an AI chatbot isn’t less discerning, just less concerned with the distinction.

So what next?

For now, our devices and desires are inextricably linked, a situation that Google’s raft of changes seeks to cement. But in the future, will conventional screen-based devices still be at the centre of our world? As the annual bipartisan announcement techfest got underway, cleverly sandwiched between Google's I/O conference and Apple's WWDC, Sam Altman's OpenAI quietly let slip that it was working with none other than Jony Ive. And not just Ive and his LoveFrom cohort, but a team of crack technologists, including alumni from Apple, who have formed a specialist start-up, io, to exclusively shape OpenAI's future move into personal devices. Altman isn't just employing io, but owns it outright, although Ive's hard-won independence will remain.

Press play and join the machine

It’s inconceivable that Apple, Google, et al, don’t have teams hard at work on a similar paradigm-busting approach to the way we communicate, consume and create. Just as screen-based computing reaches its apogee, the digital world could suddenly shift in ways we can’t yet imagine.

Google I/O 2025 can be explored in full at IO.Google

Jonathan Bell has written for Wallpaper* magazine since 1999, covering everything from architecture and transport design to books, tech and graphic design. He is now the magazine’s Transport and Technology Editor. Jonathan has written and edited 15 books, including Concept Car Design, 21st Century House, and The New Modern House. He is also the host of Wallpaper’s first podcast.